CircleCI Breach Cybersecurity Incident Hunting Guide

On January 4, 2023, CircleCI published a statement announcing the investigation of a security breach. In this technical blog, we share how to hunt for malicious behavior that this incident may have caused. That behavior may affect not only your CircleCI platform but also third-party applications integrated with it, and we hunt for it using only the default logging configuration those services provide.

We chose four popular SaaS and cloud providers (GitHub, AWS, GCP, and Azure) for this Mitiga CircleCI Breach Cybersecurity Incident Hunting Guide.

What is CircleCI?

CircleCI is one of the world's most popular continuous integration and continuous delivery (CI/CD) platforms. It can be used to implement DevOps practices, helping development teams release code rapidly and automate the build, test, and deploy cycles. CircleCI is available both as SaaS and on-premises.

What Happened in the CircleCI Breach?

On January 4, 2023, CircleCI published a statement announcing the investigation of a security incident. CircleCI strongly advised customers to immediately rotate any and all secrets stored in CircleCI. It also recommended reviewing internal logs for any unauthorized access starting from December 21, 2022. As of January 8, 2023, no further details of the incident had been made public.

Here is the link to the CircleCI incident blog:

https://circleci.com/blog/january-4-2023-security-alert/

What Is the Potential Damage From CircleCI Breach?

When using the CircleCI platform, you integrate it with other third-party systems (e.g., the SaaS and cloud providers your company uses). Examples of such platforms include:

- GitHub, for enabling build triggers and GitHub Checks integration.

- Jira, for reporting the status of builds and deployments in CircleCI Projects.

- Kubernetes, for managing your Kubernetes Engine clusters and node pools.

- AWS, for building, testing, and deploying the code on AWS resources.

For each integration, you need to provide the CircleCI platform with authentication tokens and secrets.

When a security incident involves your CircleCI platform, the danger is not limited to CircleCI. All the other SaaS platforms and cloud providers integrated with it are also at risk, since their secrets are stored within CircleCI and a threat actor can use them to expand their foothold.

Rotating any and all secrets stored in CircleCI is not enough! You must hunt for malicious actions done on all your integrated SaaS and cloud platforms to ensure your sensitive data did not get breached on the other platforms.

In the rest of this blog, we cover a number of popular SaaS and cloud providers that may be integrated with your CircleCI platform through credentials saved in “Project environment variables” and “Context variables.” For each SaaS/cloud provider, we explain:

- How CircleCI integrates with the service

- What default logs the service provides

- What to search for in the logs to hunt for suspicious behavior that may have been caused by the CircleCI security incident

How to Detect Which Services May Be Jeopardized?

Start by mapping the relevant secrets and the environments to which they are related.

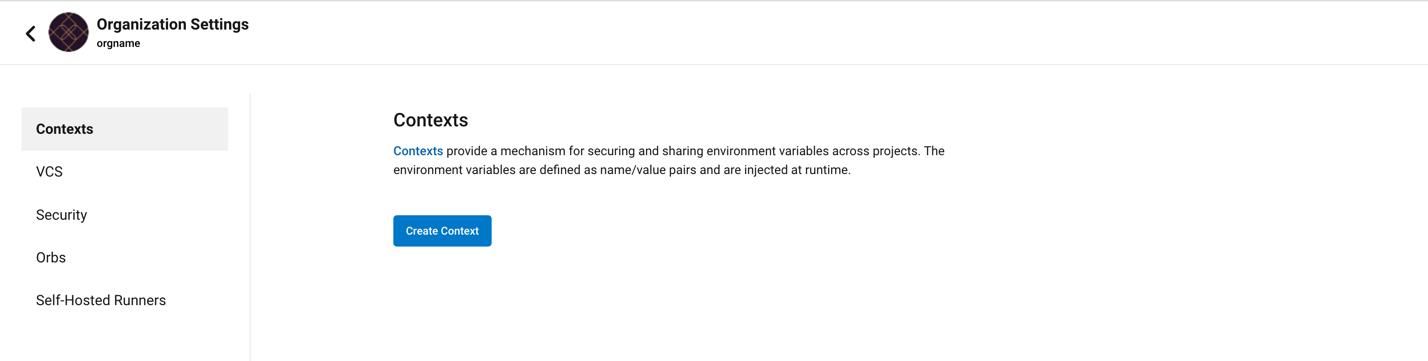

In the CircleCI console, you can store secrets as environment variables in two ways.

The recommended way is to use contexts (Organization Settings → Contexts → CONTEXT_NAME). Each project should be connected to a context, and each context holds environment variables, with each secret stored in its own variable. Use the variable names to deduce which variables hold secrets.

The other (not recommended) way is to store secrets as project environment variables (Projects → PROJECT_NAME → Project Settings → Environment Variables). Here, too, the variable names hint at their content.
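Collecting variable names by hand gets tedious across many projects and contexts. Below is a minimal sketch. It assumes a CircleCI personal API token and the CircleCI API v2 endpoints for listing context and project environment variables (`/context/{id}/environment-variable` and `/project/{slug}/envvar`). The function names are hypothetical, so check the paths and the pagination parameter against the current CircleCI API reference before relying on them. The API returns variable names (and, for project variables, only a masked value), never the secrets themselves.

```python
import json
import urllib.parse
import urllib.request

# Assumption: CircleCI API v2 base URL, paths, and "page-token" pagination.
API = "https://circleci.com/api/v2"


def _get(url: str, token: str) -> dict:
    # CircleCI API v2 accepts a personal API token in the Circle-Token header.
    req = urllib.request.Request(url, headers={"Circle-Token": token})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def item_names(page: dict) -> list[str]:
    # Context variables use the "variable" key; project variables use "name".
    return [item.get("variable") or item.get("name") for item in page.get("items", [])]


def list_names(url: str, token: str) -> list[str]:
    """Follow next_page_token pagination and collect variable names only."""
    names, page_token = [], None
    while True:
        params = {} if page_token is None else {"page-token": page_token}
        page_url = f"{url}?{urllib.parse.urlencode(params)}" if params else url
        page = _get(page_url, token)
        names.extend(item_names(page))
        page_token = page.get("next_page_token")
        if not page_token:
            return names


def context_vars(context_id: str, token: str) -> list[str]:
    return list_names(f"{API}/context/{context_id}/environment-variable", token)


def project_vars(project_slug: str, token: str) -> list[str]:
    # project_slug looks like "gh/ORG/REPO" (hypothetical example).
    return list_names(f"{API}/project/{project_slug}/envvar", token)
```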

Per CircleCI's recommendation, you should rotate all of these secrets.

We highly recommend keeping the process simple and accurate by creating a rotation table that lists every secret's name, with “rotate” and “severity” columns. Rotate the secrets one by one, mark each one in the table, and then check the permissions of every secret. At this stage, note any secret whose permissions are broader than they should be, and use that to decide where to invest your investigation time.
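The rotation table can be generated from the collected variable names. This sketch assigns an initial severity with a simple keyword heuristic. The `SEVERITY_HINTS` list is hypothetical and should be tuned to what each secret can actually reach. The other columns are left blank, to be filled in as you rotate secrets and review their permissions.

```python
import csv
import io

# Hypothetical keyword-to-severity heuristic; tune it to each secret's real reach.
SEVERITY_HINTS = [
    ("AWS", "high"),
    ("GCP", "high"),
    ("GOOGLE", "high"),
    ("AZURE", "high"),
    ("GITHUB", "high"),
    ("KUBE", "high"),
    ("JIRA", "medium"),
]


def guess_severity(name: str) -> str:
    upper = name.upper()
    for keyword, severity in SEVERITY_HINTS:
        if keyword in upper:
            return severity
    return "review"  # Purpose unclear from the name: decide manually.


def rotation_table(secret_names: list[str]) -> str:
    """Return CSV text with one row per unique secret and blank tracking columns."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["secret_name", "severity", "rotate", "permissions_checked"])
    for name in sorted(set(secret_names)):
        writer.writerow([name, guess_severity(name), "", ""])
    return buf.getvalue()
```

Open the resulting CSV in a spreadsheet, and mark the “rotate” and “permissions_checked” cells as you go.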

Hunting for suspicious activity from CircleCI Breach

As the investigation is ongoing, the details shared by the CircleCI security team are incomplete. To be on the safe side, we recommend looking for unauthorized suspicious activity in the CircleCI audit logs that could indicate active attackers, or bad actors with access to the CircleCI management console.

Logs to use

CircleCI’s audit logs record which action was taken, by which actor, on which target, and at what time. Each event includes fields such as:

- action: The action taken that created the event. The format is ASCII lowercase words, separated by dots, with the entity acted upon first and the action taken last. In some cases, entities are nested, for example, workflow.job.start.

- actor: The actor who performed this event. In most cases this will be a CircleCI user. This data is a JSON blob that will always contain id and type and will likely contain name.

- target: The entity instance acted upon for this event, for example, a project, an org, an account, or a build. This data is a JSON blob that will always contain id and type and will likely contain name.

- payload: A JSON blob of action-specific information. The schema of the payload is expected to be consistent for all events with the same action and version.

- occurred_at: When the event occurred in UTC expressed in ISO-8601 format, with up to nine digits of fractional precision, for example '2017-12-21T13:50:54.474Z'.

For more information, please visit the CircleCI documentation.
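If you export the audit log for scripted analysis, two details of this format matter. First, `actor`, `target`, and `payload` are JSON blobs. Second, `occurred_at` can carry up to nine fractional digits, while Python's `datetime` holds only microseconds. The sketch below assumes each exported event arrives as a dict whose JSON-blob columns may still be strings (for example, if read from a CSV file). The helper names are hypothetical.

```python
import json
import re
from datetime import datetime

# Matches the documented form, e.g. '2017-12-21T13:50:54.474Z' (0 to 9 fractional digits).
_OCCURRED_AT = re.compile(
    r"^(?P<base>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(?P<frac>\d{1,9}))?Z$"
)


def parse_occurred_at(value: str) -> datetime:
    match = _OCCURRED_AT.match(value)
    if match is None:
        raise ValueError(f"unexpected occurred_at value: {value!r}")
    # Truncate to microseconds and pad short fractions so fromisoformat accepts them.
    frac = (match.group("frac") or "")[:6].ljust(6, "0")
    return datetime.fromisoformat(f"{match.group('base')}.{frac}+00:00")


def normalize(record: dict) -> dict:
    """Decode JSON-blob columns that arrive as strings and parse the timestamp (assumed export shape)."""
    out = dict(record)
    for field in ("actor", "target", "payload"):
        value = out.get(field)
        if isinstance(value, str) and value:
            out[field] = json.loads(value)
    out["occurred_at"] = parse_occurred_at(record["occurred_at"])
    return out
```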

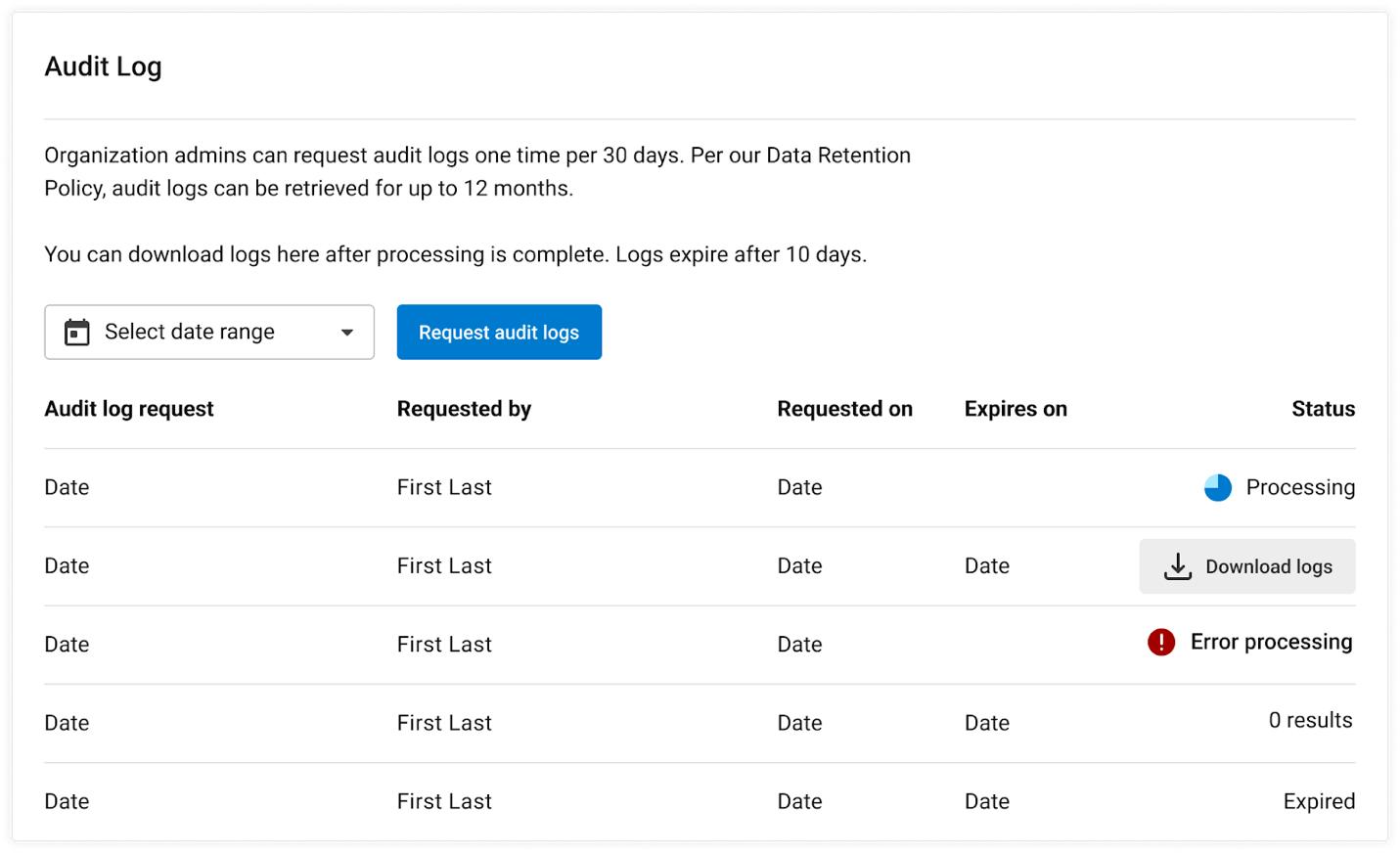

CircleCI organization admins can request audit logs from the management console. To do so, navigate to Organization Settings > Security to view the Audit Log section. Select a date range from the dropdown, then click the Request audit logs button. Please note that the relevant date range for the security incident starts on December 21, 2022. It runs through January 4, 2023, or until you have finished rotating your secrets.

What to look for

We recommend checking for actions that threat actors could abuse, such as:

- Access:

∘ user.logged_in

- Persistence:

∘ project.ssh_key.create

∘ project.api_token.create

∘ user.create
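The check above can be scripted once the events are loaded. This is a minimal sketch. It assumes each event is a dict with `action`, `occurred_at`, and a decoded `actor` blob. The action list mirrors the one above, and the window follows the December 21, 2022 to January 4, 2023 range; move `WINDOW_END` to the date your rotation finished. The function and constant names are hypothetical.

```python
from datetime import datetime, timezone

# Actions from the list above, tagged with the tactic they may indicate.
SUSPICIOUS_ACTIONS = {
    "user.logged_in": "access",
    "project.ssh_key.create": "persistence",
    "project.api_token.create": "persistence",
    "user.create": "persistence",
}

WINDOW_START = datetime(2022, 12, 21, tzinfo=timezone.utc)
WINDOW_END = datetime(2023, 1, 5, tzinfo=timezone.utc)  # Exclusive: covers all of January 4.


def _ts(value: str) -> datetime:
    # Second precision is enough for window filtering: drop the fraction and "Z".
    return datetime.strptime(value[:19], "%Y-%m-%dT%H:%M:%S").replace(tzinfo=timezone.utc)


def flag_events(events, start=WINDOW_START, end=WINDOW_END):
    """Yield (tactic, time, action, actor) for watched actions inside [start, end)."""
    for event in events:
        tactic = SUSPICIOUS_ACTIONS.get(event.get("action"))
        if tactic is None:
            continue
        when = _ts(event["occurred_at"])
        if start <= when < end:
            actor = event.get("actor") or {}
            yield tactic, when.isoformat(), event["action"], actor.get("name", actor.get("id"))
```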

Hunting for GitHub suspicious activity

How CircleCI integrates with GitHub

CircleCI authenticates to GitHub using a personal access token (PAT), an SSH key, or a locally generated private/public key pair. You can read more here:

https://medium.com/@praveena.vennakula/github-circleci-authentication-ef1e85d24b0

Related Logs

- GitHub Audit logs — The audit log allows organization admins to quickly review the actions performed by members of your organization. It includes details, such as who performed the action, what the action was, and when it was performed. The audit log lists events triggered by activities that affect your enterprise within the current month and up to the previous six months. The audit log retains Git events for seven days.

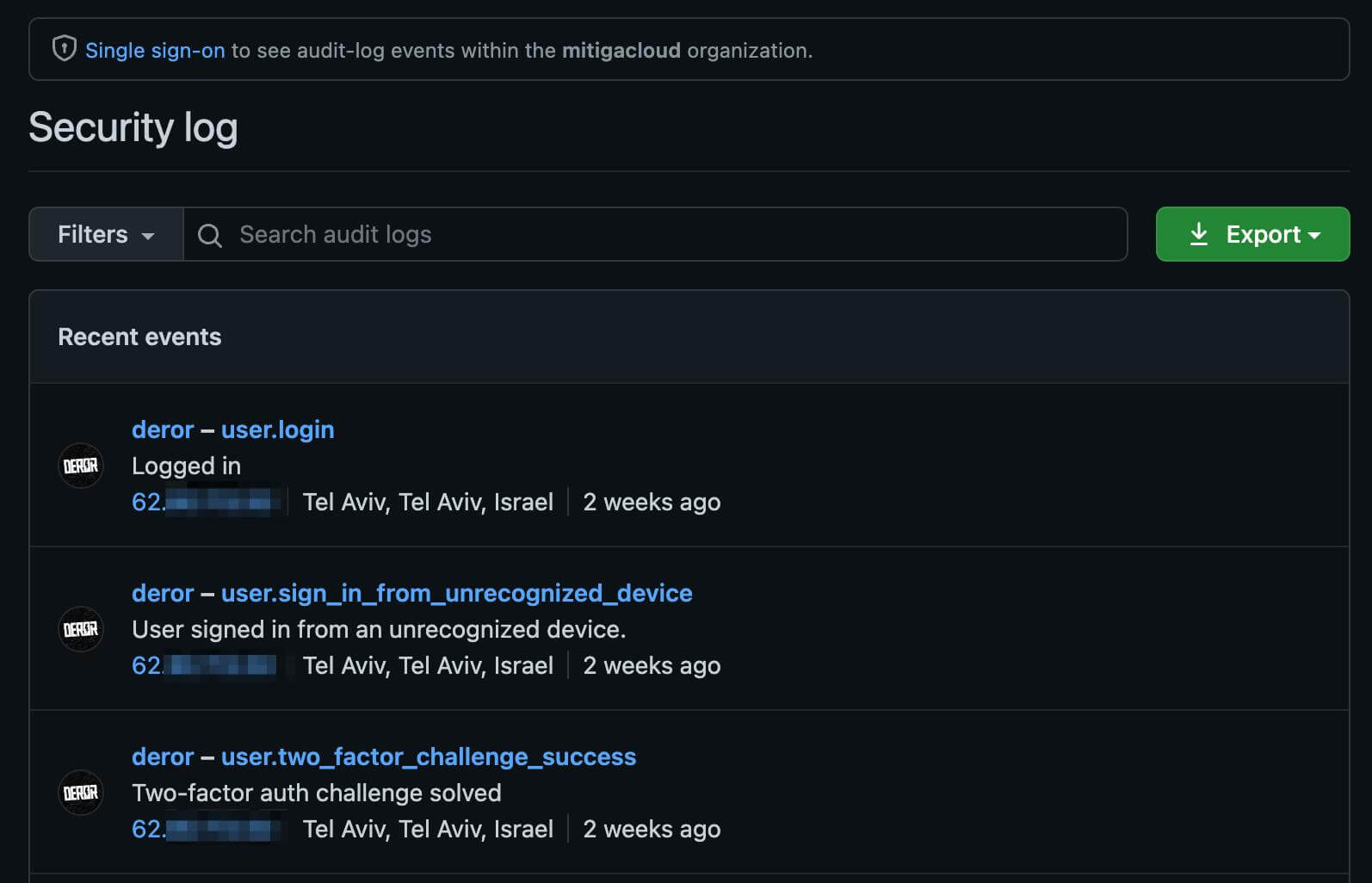

- GitHub security log — You can review the security log for your personal account to better understand actions you've performed, and actions others have performed that involve you.

What to look for

We want to hunt for suspicious activity originating from the CircleCI user. Note: in some cases, the key used by CircleCI belongs to a DevOps engineer's personal account, which can make identifying malicious behavior much harder.

- Git activity, such as git.clone, git.fetch, or git.pull, that does not match your normal CircleCI build pattern.

- Important note: by default, the GitHub organization audit log does not include the source IP address field. You can enable it by following this GitHub guide: https://docs.github.com/en/enterprise-cloud@latest/admin/monitoring-activity-in-your-enterprise/reviewing-audit-logs-for-your-enterprise/displaying-ip-addresses-in-the-audit-log-for-your-enterprise

- GitHub audit logs do contain an “actor_location” field, a GeoIP-derived location that GitHub provides. You can use this field to help detect unauthorized authentications to GitHub.

You can also sign in to github.com as the CircleCI user and manually review the security log in the user settings. This log includes the source IP by default, so you can hunt for abnormal connections, potential attacks, and operations originating from new IPs.
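For organizations on GitHub Enterprise Cloud, you can also pull the organization audit log through the REST API (`GET /orgs/{org}/audit-log`, with `include=git` for Git events) and summarize it by `actor_location`. The sketch below is an illustration under several assumptions. It fetches only the first page, uses a token with the `read:audit_log` scope, and expects events to carry `action`, `actor`, and `actor_location.country_code` fields. You supply the CircleCI account name and the set of expected countries. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from collections import Counter

GIT_ACTIONS = {"git.clone", "git.fetch", "git.pull"}


def fetch_org_audit_log(org: str, token: str, actor: str) -> list[dict]:
    """First page only (per_page=100); follow the Link header to paginate. Assumes Enterprise Cloud."""
    query = urllib.parse.urlencode({"phrase": f"actor:{actor}", "include": "git", "per_page": 100})
    req = urllib.request.Request(
        f"https://api.github.com/orgs/{org}/audit-log?{query}",
        headers={"Authorization": f"Bearer {token}", "Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def git_activity_by_country(events: list[dict], actor: str, expected: set[str]) -> dict:
    """Count the actor's Git events per country code and list countries outside `expected`."""
    counts = Counter()
    for event in events:
        if event.get("actor") != actor or event.get("action") not in GIT_ACTIONS:
            continue
        country = (event.get("actor_location") or {}).get("country_code", "unknown")
        counts[country] += 1
    return {"counts": dict(counts), "unexpected": sorted(set(counts) - expected)}
```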

Hunting for AWS suspicious activity

How CircleCI integrates with AWS

CircleCI can use AWS to deploy applications and code to services such as S3, ECR, ECS, and CodeDeploy.

To connect to AWS, CircleCI authenticates as an IAM user with programmatic access, using an access key ID and a secret access key (AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY).

For more information, please refer to: https://circleci.com/docs/deploy-to-aws/

Related Logs

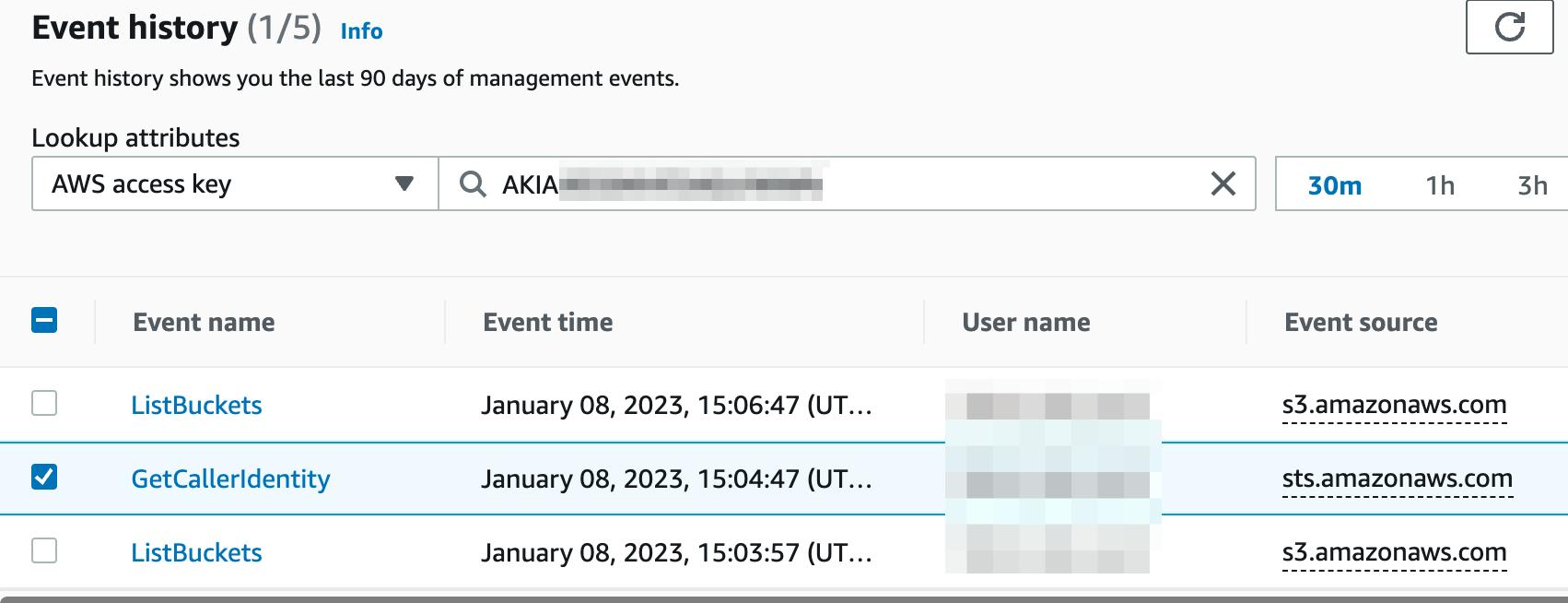

CloudTrail

AWS CloudTrail monitors and records account activity across your AWS infrastructure, giving you control over storage, analysis, and remediation actions. (Source: Amazon documentation).

By default, AWS CloudTrail records management events in the AWS account for the preceding 90 days. Using these CloudTrail logs, investigators and security teams can see management API activity.

Note that data events, such as reading from and writing to an S3 bucket, are not logged by default. To collect them, you need to configure a dedicated CloudTrail trail with data events enabled.
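To confirm whether an existing trail already records S3 data events, you can inspect each trail's event selectors. This sketch assumes boto3 credentials for the account and checks both classic `EventSelectors` and `AdvancedEventSelectors`. It only lists trails whose home region matches the client's region, so repeat it per region. The function names are hypothetical.

```python
def logs_s3_data_events(selectors: dict) -> bool:
    """True if a get_event_selectors response records S3 object-level (data) events."""
    for selector in selectors.get("EventSelectors", []):
        for resource in selector.get("DataResources", []):
            if resource.get("Type") == "AWS::S3::Object":
                return True
    for selector in selectors.get("AdvancedEventSelectors", []):
        fields = {f["Field"]: f.get("Equals", []) for f in selector.get("FieldSelectors", [])}
        if "Data" in fields.get("eventCategory", []) and "AWS::S3::Object" in fields.get("resources.type", []):
            return True
    return False


def audit_trails(region: str) -> dict:
    import boto3  # Lazy import so the pure helper above works without boto3 installed.

    client = boto3.client("cloudtrail", region_name=region)
    result = {}
    # includeShadowTrails=False: only trails whose home region is `region`.
    for trail in client.describe_trails(includeShadowTrails=False)["trailList"]:
        selectors = client.get_event_selectors(TrailName=trail["TrailARN"])
        result[trail["Name"]] = logs_s3_data_events(selectors)
    return result
```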

What to look for

For the CircleCI IAM user, hunt for the following (you can filter CloudTrail events by the user's AWS access key ID):

- Events that the CircleCI user shouldn't be performing:

∘ Suspicious reconnaissance activity, such as ListBuckets or GetCallerIdentity

∘ AccessDenied errors

- Activity originating from unfamiliar sources:

∘ IP addresses that have not been observed before, possibly from unusual countries, proxy providers, or VPNs

∘ Programmatic user agents, such as boto3 and curl
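The hunt above can be sketched with boto3's CloudTrail `lookup_events`, filtering on the CircleCI user's access key ID. This is a minimal example, assuming boto3 credentials with `cloudtrail:LookupEvents`. Event history is per region and covers only the last 90 days, so run it in every region your pipelines use. The recon list, user-agent keywords, and `known_ips` baseline are illustrative assumptions. `aws-cli` user agents are deliberately not flagged, because CircleCI jobs commonly use the AWS CLI.

```python
import json
from datetime import datetime, timezone

RECON_EVENTS = {"ListBuckets", "GetCallerIdentity"}  # Illustrative; extend as needed.
SCRIPTED_AGENTS = ("boto3", "curl")  # Matched case-insensitively in userAgent.


def classify(event: dict, known_ips: set[str]) -> list[str]:
    """Return why a parsed CloudTrailEvent record looks suspicious (empty list if it does not)."""
    reasons = []
    if event.get("eventName") in RECON_EVENTS:
        reasons.append("recon")
    error = event.get("errorCode", "")
    if "AccessDenied" in error or "UnauthorizedOperation" in error:
        reasons.append("access-denied")
    if event.get("sourceIPAddress") not in known_ips:
        reasons.append("new-ip")
    agent = event.get("userAgent", "").lower()
    if any(tool in agent for tool in SCRIPTED_AGENTS):
        reasons.append("scripted-agent")
    return reasons


def hunt(access_key_id: str, known_ips: set[str], region: str) -> list[tuple]:
    import boto3  # Lazy import: classify() above needs no AWS SDK.

    client = boto3.client("cloudtrail", region_name=region)
    pages = client.get_paginator("lookup_events").paginate(
        LookupAttributes=[{"AttributeKey": "AccessKeyId", "AttributeValue": access_key_id}],
        StartTime=datetime(2022, 12, 21, tzinfo=timezone.utc),  # Incident start; adjust.
        EndTime=datetime.now(timezone.utc),
    )
    hits = []
    for page in pages:
        for summary in page["Events"]:
            record = json.loads(summary["CloudTrailEvent"])
            reasons = classify(record, known_ips)
            if reasons:
                hits.append((record["eventTime"], record["eventName"], record.get("sourceIPAddress"), reasons))
    return hits
```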

Hunting for GCP suspicious activity

How CircleCI integrates with GCP

You can integrate your GCP environment with CircleCI to deploy applications using GCP services, such as Google Compute Engine and Google Kubernetes Engine.

To do that, create a service account and add its key to CircleCI as a project environment variable. You can use a user account instead, but this is strongly discouraged.

For more information, please refer to: https://circleci.com/docs/authorize-google-cloud-sdk/

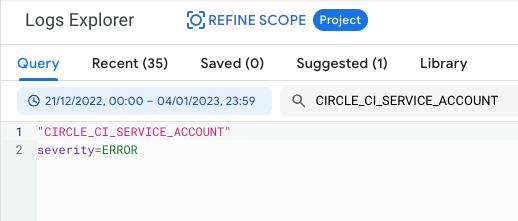

Related Logs

Cloud Audit Logs

Google Cloud services write audit logs that record administrative activities and accesses within your Google Cloud resources. Audit logs help you answer, "Who did what, where, and when?". (Source: GCP documentation).

There are three types of cloud audit logs you can use:

- Admin Activity audit logs: These contain log entries for API calls or other actions that modify the configuration or metadata of resources. The default retention for these logs is 400 days.

- Data Access audit logs: These contain API calls that read the configuration or metadata of resources, as well as user-driven API calls that create, modify, or read user-provided resource data. The default retention for these logs is 30 days. Keep in mind that Data Access audit logs (except for BigQuery Data Access audit logs) are disabled by default, and you must explicitly enable them in advance: https://cloud.google.com/logging/docs/audit/configure-data-access

- Policy Denied audit logs: These are recorded when a Google Cloud service denies access to a user or service account because of a security policy violation. Because GCP records these denials on its own, these logs may surface blocked malicious or suspicious actions without extra effort on your part. The default retention for these logs is 30 days.

You can view audit logs in Cloud Logging by using the Google Cloud console (Logs Explorer), the Google Cloud CLI, or the Logging API.

What to look for

First, identify which service account CircleCI uses, and then determine which resources it has permissions on.

To check the permissions, you can use either the API or the CLI:

- API: searchAllIamPolicies (https://cloud.google.com/asset-inventory/docs/reference/rest/v1/TopLevel/searchAllIamPolicies)

- CLI: gcloud asset search-all-iam-policies (https://cloud.google.com/sdk/gcloud/reference/asset/search-all-iam-policies)

In the query field, filter by policy:SERVICE_ACCOUNT_EMAIL.

(If you usually use only the console, you can click the Activate Cloud Shell button in the top-right corner to use the CLI in your browser without any prerequisites.)
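The same lookup can be scripted around the gcloud CLI. This sketch assumes the gcloud CLI is installed and authenticated with permission to search IAM policies at the chosen scope. `search_command`, `grants_for`, and `run` are hypothetical helper names. The sketch turns the JSON results into (resource, role) pairs for the service account.

```python
import json
import subprocess


def search_command(scope: str, sa_email: str) -> list[str]:
    # scope examples: "projects/PROJECT_ID", "folders/FOLDER_ID", "organizations/ORG_ID".
    return [
        "gcloud", "asset", "search-all-iam-policies",
        f"--scope={scope}",
        f"--query=policy:{sa_email}",
        "--format=json",
    ]


def grants_for(results: list[dict], sa_email: str) -> list[tuple[str, str]]:
    """Extract (resource, role) pairs whose bindings include the service account."""
    member = f"serviceAccount:{sa_email}"
    pairs = []
    for result in results:
        for binding in result.get("policy", {}).get("bindings", []):
            if member in binding.get("members", []):
                pairs.append((result.get("resource", ""), binding.get("role", "")))
    return pairs


def run(scope: str, sa_email: str) -> list[tuple[str, str]]:
    proc = subprocess.run(search_command(scope, sa_email), check=True, capture_output=True, text=True)
    return grants_for(json.loads(proc.stdout or "[]"), sa_email)
```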

After you understand the permissions of the relevant service account, we recommend looking at its behavior in the logs.

You can investigate the logs in Logs Explorer and search for abnormalities, such as records with ERROR severity, unusual timestamps, or unfamiliar IP subnets.
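To narrow Logs Explorer to the service account and the incident window, you can filter on `protoPayload.authenticationInfo.principalEmail` in a Logging query. The same filter works with `gcloud logging read`. The sketch below is illustrative and assumes an authenticated gcloud CLI. It counts entries per `protoPayload.requestMetadata.callerIp` so that unusual subnets stand out. Because the filter restricts the timestamp, gcloud's default one-day freshness limit does not apply. The helper names are hypothetical.

```python
import json
import subprocess
from collections import Counter


def audit_filter(sa_email: str, start: str, end: str, errors_only: bool = False) -> str:
    """Build a Logging query; separate lines are ANDed together."""
    lines = [
        f'protoPayload.authenticationInfo.principalEmail="{sa_email}"',
        f'timestamp>="{start}"',
        f'timestamp<="{end}"',
    ]
    if errors_only:
        lines.append("severity>=ERROR")
    return "\n".join(lines)


def caller_ips(entries: list[dict]) -> Counter:
    """Count entries per caller IP to spot unfamiliar subnets."""
    return Counter(
        e.get("protoPayload", {}).get("requestMetadata", {}).get("callerIp", "unknown")
        for e in entries
    )


def read_logs(project: str, log_filter: str, limit: int = 1000) -> list[dict]:
    cmd = ["gcloud", "logging", "read", log_filter, f"--project={project}", "--format=json", f"--limit={limit}"]
    proc = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return json.loads(proc.stdout or "[]")
```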

Hunting for Azure suspicious activity

How CircleCI integrates with Azure

In CircleCI, you may use the azure-cli orb (https://circleci.com/developer/orbs/orb/circleci/azure-cli#quick-start) to automate Azure-related workflows and jobs. To authenticate with your Azure resources, you need to provide the CircleCI configuration with either Azure service principal credentials or Azure user credentials.

In this blog, we focus on using an Azure service principal:

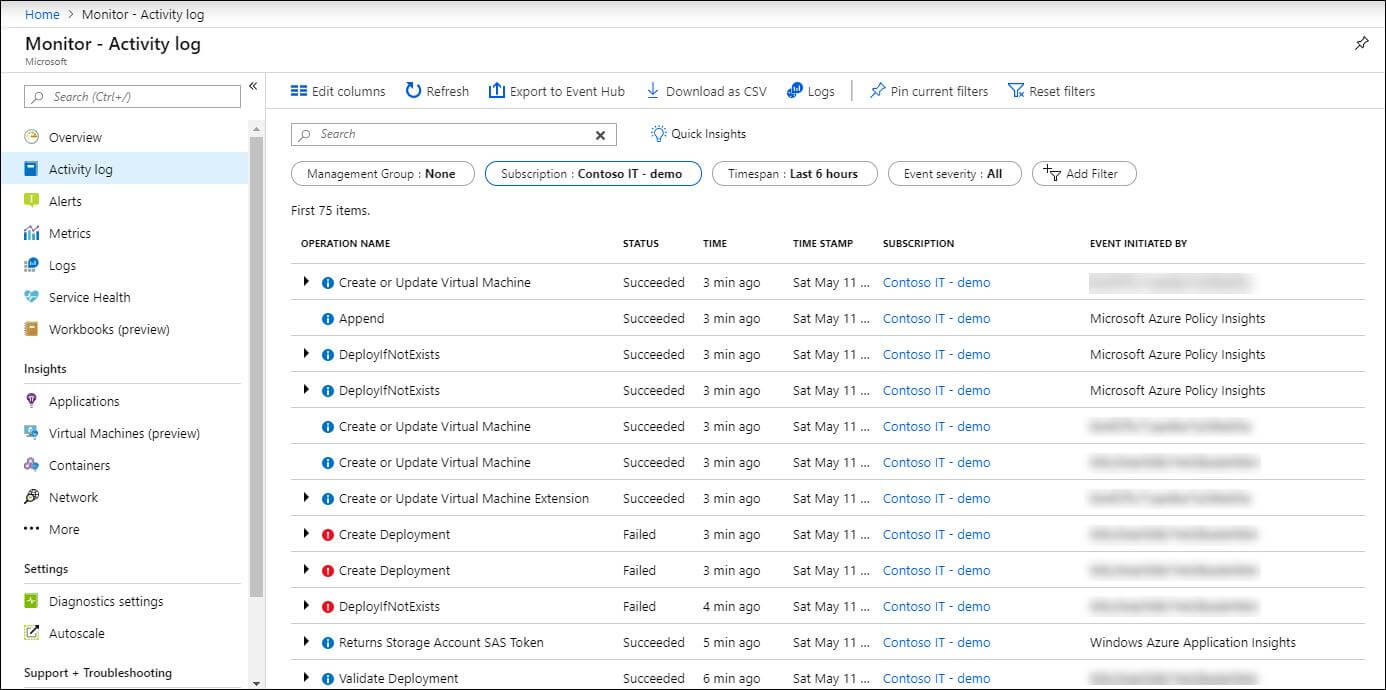

Logs to use

1. Azure Active Directory Sign-in logs

Reviewing sign-in errors and patterns provides valuable insight into how your users access applications and services. The sign-in logs provided by Azure Active Directory (Azure AD) are a powerful type of activity log that IT administrators can analyze. (Source: Microsoft documentation)

The default data retention for the “Azure AD Free” license is 7 days, and you can access the logs only through the Azure portal. For Premium P1/P2 licenses, the retention is 30 days, and you can access the logs through both the Azure portal and the Microsoft Graph API.

Azure AD sign-in logs include “Service principal sign-ins,” which are sign-ins by any non-user account, such as apps or service principals.

What to look for

Using these logs, you can detect all the sign-ins made by CircleCI-dedicated service principals. In every sign-in log entry, check for abnormalities in the sign-in time and the source IP.
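With a Premium license, you can do the same review through Microsoft Graph. This sketch assumes the Graph beta `auditLogs/signIns` endpoint, which accepts a `signInEventTypes` filter for service principal sign-ins. It also assumes you obtain an access token with `AuditLog.Read.All` separately; token acquisition and the HTTP call itself are not shown. `signins_url` and `unusual_signins` are hypothetical helpers. The second one groups sign-in times by source IPs that fall outside a baseline you supply.

```python
import urllib.parse
from collections import defaultdict

# Assumption: beta endpoint; service principal sign-ins are selected via signInEventTypes.
GRAPH_BETA = "https://graph.microsoft.com/beta/auditLogs/signIns"


def signins_url(app_id: str, since: str) -> str:
    """Build a filtered sign-ins URL for one app ID since an ISO-8601 UTC timestamp."""
    expr = (
        "signInEventTypes/any(t: t eq 'servicePrincipal')"
        f" and appId eq '{app_id}' and createdDateTime ge {since}"
    )
    return f"{GRAPH_BETA}?$filter={urllib.parse.quote(expr)}"


def unusual_signins(records: list[dict], known_ips: set[str]) -> dict:
    """Group sign-in timestamps by source IP, keeping only IPs outside the known set."""
    by_ip = defaultdict(list)
    for record in records:
        ip = record.get("ipAddress") or "unknown"
        if ip not in known_ips:
            by_ip[ip].append(record.get("createdDateTime"))
    return dict(by_ip)
```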

2. Azure Activity logs

The Azure Monitor activity log is a platform log in Azure that provides insight into subscription-level events. The activity log includes information, such as when a resource is modified, or a virtual machine is started. (Source: Microsoft documentation)

Using the activity logs, you have a clear view of every write operation performed on the resources in the subscription. Unfortunately, you cannot detect reconnaissance actions, such as listing the storage accounts, because the activity log does not record read (GET) operations.

The default data retention for the activity logs is 90 days, and you can retrieve the logs using the Azure portal, PowerShell, or the Azure CLI.

What to look for

Filter all the actions performed by the CircleCI-dedicated service principal, and check whether it executed actions on your subscription that it does not normally perform.
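This comparison can be scripted with the Azure CLI. The sketch assumes `az` is logged in to the right subscription. It also assumes that the activity log `caller` for the service principal is its application (client) ID; confirm this against a known event first. It diffs the operations seen in the incident window against a baseline window, which must still fall within the 90-day retention. The helper names are hypothetical.

```python
import json
import subprocess


def activity_command(caller: str, start: str, end: str) -> list[str]:
    return [
        "az", "monitor", "activity-log", "list",
        "--caller", caller,  # Assumption: the service principal's app (client) ID.
        "--start-time", start,
        "--end-time", end,
        "--max-events", "10000",  # The CLI returns only 50 events by default.
        "--output", "json",
    ]


def new_operations(incident: list[dict], baseline: list[dict]) -> set[str]:
    """Operations seen in the incident window but never in the baseline window."""
    def ops(events: list[dict]) -> set[str]:
        return {(e.get("operationName") or {}).get("value", "") for e in events}

    return ops(incident) - ops(baseline)


def load(caller: str, start: str, end: str) -> list[dict]:
    proc = subprocess.run(activity_command(caller, start, end), check=True, capture_output=True, text=True)
    return json.loads(proc.stdout or "[]")
```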

Summary

This is yet another example of a potential supply chain attack affecting CircleCI customers. Hunting for malicious actions performed through a compromised CI/CD tool in your organization is not trivial, because the impact extends beyond the CI/CD tool itself to every SaaS platform integrated with it.

This is why mature organizations adopt a holistic Breach Readiness Approach, maintain a Forensic Data Lake, and team with a SaaS- and cloud-focused IR partner to assist them in such inevitable cases. That IR partner should integrate in advance and provide not just expertise, but also purpose-built technology and cloud forensics capabilities to mitigate scale, retention time, and throttling issues.

As part of our IR² Cloud Readiness solution, Mitiga natively connects with your cloud providers and SaaS services to help customers quickly recover from a cybersecurity incident. Additionally, IR² continually runs threat hunts, such as one for the CircleCI breach, against customer forensics data. Based on our ever-expanding Cloud Attack Scenario Library (CASL), each hunt either provides a “clean check” or triggers Incident Response.

LAST UPDATED:

June 23, 2025